Tuesday 7 March, 2023

No shortage of vegetables

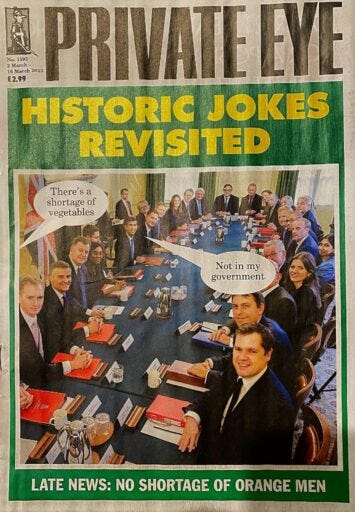

From Private Eye (Which God Preserve).

The magazine is having a hard time at the moment, not because of circulation problems (on the contrary), but because finding ways of satirising the UK’s shambolic ‘government’ is a daunting task.

Quote of the Day

“The United Nations cannot do anything and never could. It is not an inanimate entity or agent. It is a place, a forum, and a shrine — a place to which powerful people can repair when they are fearful about the course on which their own rhetoric seems to be propelling them.”

Conor Cruise O’Brien

(Who was once my Editor-in-Chief at the Observer, and had earlier been the UN’s High Representative in Katanga at the height of the Congolese civil war.)

Musical alternative to the morning’s radio news

Stanley Jordan | Eleanor Rigby | Newport Jazz Festival (1986)

Wonder what Paul McCartney thought of it.

Long Read of the Day

Can any of the companies working on ‘Generative AI’ be trusted?

Recently OpenAI, the outfit behind ChatGPT and the GPT Large Language Models (LLMs), published an odd manifesto-like essay with the title Planning for AGI and beyond (‘AGI’ meaning artificial general intelligence — i.e. superintelligence). Implicit in this is the standard delusion of all the machine-learning evangelists, namely that more and more powerful machine-learning systems will eventually get us to superintelligent machines. I think this is baloney, but we will let that pass. What’s interesting is that OpenAI appears to believe it.

The document has an overly pious air. “Our mission,” it bleats, “is to ensure that artificial general intelligence—AI systems that are generally smarter than humans—benefits all of humanity”. It then sets out three principles “we care about most”. They are:

We want AGI to empower humanity to maximally flourish in the universe. We don’t expect the future to be an unqualified utopia, but we want to maximize the good and minimize the bad, and for AGI to be an amplifier of humanity.

We want the benefits of, access to, and governance of AGI to be widely and fairly shared.

We want to successfully navigate massive risks. In confronting these risks, we acknowledge that what seems right in theory often plays out more strangely than expected in practice. We believe we have to continuously learn and adapt by deploying less powerful versions of the technology in order to minimize “one shot to get it right” scenarios.

On the principle that one should never give a tech company an even break, I am suspicious of this stuff. So I thought I’d go see what Scott Alexander, a fairer-minded (and more erudite) observer, made of it. And it turns out that he had already composed a long, long and exceedingly thoughtful blog post about it. Which is why I’m recommending that you brew some coffee and pull up a chair to ponder it.

This is what he concludes at the end:

What We’re Going To Do Now

Realistically we’re going to thank them profusely for their extremely good statement, then cross our fingers really hard that they’re telling the truth.

OpenAI has unilaterally offered to destroy the world a bit less than they were doing before. They’ve voluntarily added things that look like commitments – some enforceable in the court of public opinion, others potentially in courts of law. Realistically we’ll say “thank you for doing that”, offer to help them turn those commitments into reality, and do our best to hold them to it. It doesn’t mean we have to like them period, or stop preparing for them to betray us.

But it’s worth reading the considerations that led Alexander to this. Sometimes the journey, as well as the destination, matters.

Books, etc.

I’ve been putting off reading this because it seemed slightly crackpot, and yet its author seems sane. Fortunately, Diane Coyle (Whom God Preserve) ploughed in and read it for us.

I pounced on the paperback of Reality+ by Dave Chalmers, eager to know what philosophy has to say about digital tech beyond the widely-explored issues of ethics and AI. It’s an enjoyable read, and – this is meant to be praise, although it sounds faint – much less heavy-going than many philosophy books. However, it’s slightly mad. The basic proposition is that we are far more likely than not to be living in a simulation (by whom? By some creator who is in effect a god), and we have no way of knowing that we’re not. Virtual reality is real, simulated beings are no different from human beings.

In the end, though, she recommended the book.

It may be unhinged in parts (like Bing’s Sydney) but it’s thought-provoking and enjoyable. And we are whether we like it or not embarked on a huge social experiment with AI and VR so we should be thinking about these issues.

I still think I’ll pass on the offer.

My commonplace booklet

Also: Toblerone will remove the Matterhorn logo from its packaging as some of the chocolate bar’s production moves from Switzerland to Slovakia. Wonder what mountain they will use then.